Concerns over child exploitation on TikTok have resurfaced following claims that the social media giant is profiting from sexually suggestive livestreams performed by minors. A BBC investigation has found that girls and young women, some as young as 15, have been using TikTok to advertise explicit content and negotiate payment for it, with the content itself shared on other messaging platforms.

Although TikTok's policies prohibit solicitation, former content moderators allege that the company is fully aware of these activities but has not done enough to stop them. Reports indicate that TikTok takes a substantial cut, up to 70%, of livestream transactions, raising questions about whether its financial incentives discourage it from intervening.

In response, TikTok stated that it has “zero tolerance for exploitation” and maintains that it has implemented safeguards to protect underage users.

Rising Cases of Exploitative Livestreams

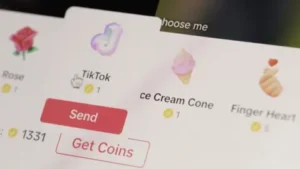

The investigation found that TikTok livestreams featuring explicit content have become increasingly popular in Kenya, particularly late at night. In multiple livestreams monitored over a week, performers danced suggestively while encouraging viewers to send emoji "gifts", a form of virtual currency that can be converted into cash.

Performers also used coded language to evade moderation. Phrases such as "Inbox me for kinembe" (kinembe is slang for clitoris) directed viewers to private conversations where further explicit content was offered.

A former TikTok moderator, speaking under the pseudonym Jo, claimed that the company benefits financially from these livestreams and has little incentive to enforce stricter policies. “The more people send gifts, the more revenue TikTok generates,” Jo said.


TikTok’s History of Alleged Inaction

This is not the first time TikTok has faced scrutiny over child exploitation. A 2022 internal investigation reportedly raised similar concerns, but moderators claim the platform has not taken significant action.

Last year, the U.S. state of Utah filed a lawsuit accusing TikTok of profiting from the exploitation of minors on its platform. The company denied the allegations, saying the lawsuit ignored the "proactive measures" it had taken to improve user safety.

Kenya has become a hotspot for these activities, according to ChildFund Kenya. The combination of widespread internet access, high youth unemployment, and weaker content moderation compared to Western nations has contributed to the problem.

Moderation Challenges and Loopholes

Moderators working for TikTok’s third-party contractor, Teleperformance, have spoken out about the platform’s shortcomings in content moderation. Jo explained that moderators are provided with a list of banned words and actions, but these guidelines fail to capture slang and non-verbal cues commonly used to solicit explicit interactions.

“You can tell by their body language—the way they position the camera, the way they move—that they are advertising something more, but if they don’t explicitly say it, we can’t take action,” Jo revealed.

Another moderator, identified as Kelvin, stated that TikTok’s increasing reliance on artificial intelligence (AI) for content moderation has made it even harder to detect these violations. AI, he claimed, struggles to recognize local slang and gestures used to bypass moderation.

A Growing Underground Economy on TikTok

For many young users, these livestreams have become a source of income. Several teenagers and young women said they spend up to seven hours a night on these livestreams, earning an average of £30 a day.

One 17-year-old, referred to as Esther, told investigators that she started performing on TikTok at the age of 15. “I sell myself on TikTok. I dance naked because that’s how I make money to support myself,” she said.

Living in a Nairobi slum, where thousands of residents share limited facilities, Esther said her earnings help her feed her child and support her mother, who has been struggling financially since the death of her father.

TikTok requires users to be at least 18 and to have at least 1,000 followers before they can livestream, yet many minors continue to get around these rules, often with the help of older users.

Calls for Stronger Regulations

Child protection organizations are calling for stricter regulations and stronger enforcement mechanisms to prevent the exploitation of minors on TikTok. Advocates argue that the platform’s financial interests in livestream transactions may be preventing it from fully addressing the issue.

With increasing legal scrutiny and mounting pressure from watchdog organizations, TikTok now faces tough questions about whether it is genuinely committed to eliminating child exploitation or whether its profit-driven model is undermining its safety measures.